JUPITER

An AI-based mission planning framework for Navy operations

These systems range from individual autonomous robotic platforms to large-scale, multi-agent systems for information management and command & control.

To be trustworthy, AI systems must be resilient, unbiased, accountable, and understandable. But most AI systems cannot explain how they reached their conclusions. Explainable AI (XAI) promotes trust by providing accurate, human-understandable reasons for all system outputs.

AI-enhanced design collaboration that supports human creativity while mitigating bias

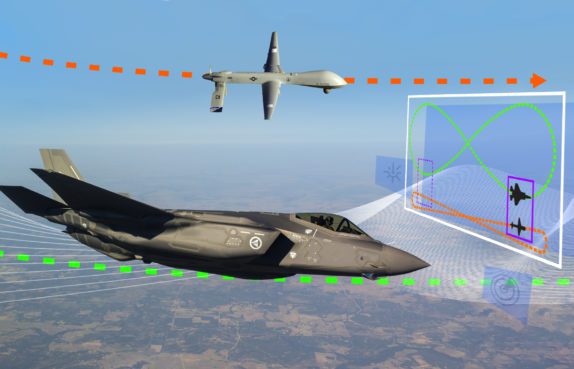

An enhanced crewed–uncrewed team communication prototype for complex settings

Tools that make DRL agents understandable and trustable

Causal models to explain learning