Imagine artificial intelligence (AI) as a teammate, working alongside humans in life-or-death scenarios: transporting critical medical supplies in combat zones, or analyzing medical images to flag those that need closer examination.

Scientists at Charles River Analytics aren’t just imagining this; they are working to make it happen through research on competency-aware machine learning (ML). Like other ML systems, competency-aware ML systems learn from experience. What sets them apart is that they also learn about their own strengths and weaknesses, and communicate this knowledge to their human teammates.

One of Charles River’s current projects in competency-aware ML is ALPACA (Advancing Learning via Probabilistic Causal Analysis for Competency Awareness). Using autonomous robot navigation as its test domain, ALPACA is developing and testing a machine learning agent with competency-awareness capabilities. The project is funded by DARPA through its Competency-Aware Machine Learning (CAML) program.

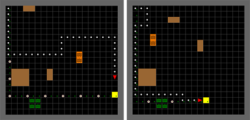

The project team began by experimenting with different ML agents in MiniGrid, a popular “sandbox” environment for rapidly developing and testing AI agents. The team trained an agent under two conditions: slippery surfaces and normal surfaces. On slippery surfaces, the agent learned to give obstacles a wide berth; on normal surfaces, it took the shortest path.
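Because the two training conditions produce visibly different behavior, an observer could in principle tell them apart from behavior alone. The Python sketch below is a toy illustration of that idea, not ALPACA’s actual method: it assumes each observed trajectory is summarized by the agent’s clearance from obstacles at each step, models each strategy’s clearances with a hypothetical Gaussian, and uses Bayes’ rule to estimate which strategy most likely produced a trajectory. The strategy names, means, standard deviations, and function names are all invented for illustration.

```python
import math
from typing import Dict, Optional, Sequence

# Hypothetical per-strategy models of obstacle clearance (in grid cells).
# These numbers are illustrative assumptions, not values from ALPACA.
STRATEGIES: Dict[str, Dict[str, float]] = {
    "slippery": {"mean": 2.0, "std": 0.5},  # wide berth around obstacles
    "normal": {"mean": 1.0, "std": 0.5},    # shortest path, close to obstacles
}


def gaussian_log_pdf(x: float, mean: float, std: float) -> float:
    """Log density of a normal distribution evaluated at x."""
    return -0.5 * math.log(2 * math.pi * std**2) - (x - mean) ** 2 / (2 * std**2)


def strategy_posterior(
    clearances: Sequence[float],
    prior: Optional[Dict[str, float]] = None,
) -> Dict[str, float]:
    """Posterior probability of each strategy given observed clearances,
    treating per-step clearances as independent draws (a simplifying assumption)."""
    if prior is None:
        prior = {name: 1 / len(STRATEGIES) for name in STRATEGIES}
    log_scores = {}
    for name, params in STRATEGIES.items():
        log_lik = sum(
            gaussian_log_pdf(c, params["mean"], params["std"]) for c in clearances
        )
        log_scores[name] = math.log(prior[name]) + log_lik
    # Normalize in log space (log-sum-exp) for numerical stability.
    max_score = max(log_scores.values())
    total = sum(math.exp(s - max_score) for s in log_scores.values())
    return {name: math.exp(s - max_score) / total for name, s in log_scores.items()}


observed = [1.9, 2.2, 1.8, 2.1]  # a trajectory that stays well clear of obstacles
posterior = strategy_posterior(observed)
print(max(posterior, key=posterior.get))  # prints "slippery"
```

A real system would use far richer behavioral features and models; the point here is only that a learned strategy leaves a statistical signature in behavior that probabilistic inference can recover.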

The next step was to build an agent in AirSim, an open-source simulator for drones, cars, and other vehicles that serves as a platform for AI research. Once the agent succeeds in AirSim, it will be transferred to Campus Jackal, a state-of-the-art robot for navigating outdoor environments. It’s easy to see how the competencies being assessed in ALPACA (obstacle avoidance and route efficiency) apply to self-driving cars and other autonomous vehicles. But the team’s work on competency-aware ML goes beyond solving a specific problem: it is foundational research that will help AI systems function as teammates rather than tools.

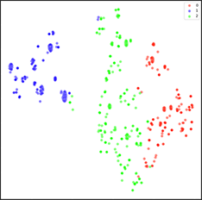

This potential was demonstrated in a recent ALPACA experiment designed to answer one question: after training an ML agent to perform a task, could we “discover” the strategies it learned in training based solely on its observed behavior?

The answer was “yes,” which means that the ALPACA approach can be applied to other ML systems. Once an ML system’s task strategies have been discovered, ALPACA can use probabilistic causal models to generate human-understandable competency statements. As the ML system continues to learn about its competencies, and to report and explain them to its human partners, it builds trust. Ultimately, this trust will enable human-machine partnerships to advance beyond what is possible with current technologies, and beyond what we can imagine today.

This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA) under Contract No. HR001120C0031. Any opinions, findings and conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of DARPA.