Meet us at the GEOINT 2025 Conference!

Space domain awareness training tool

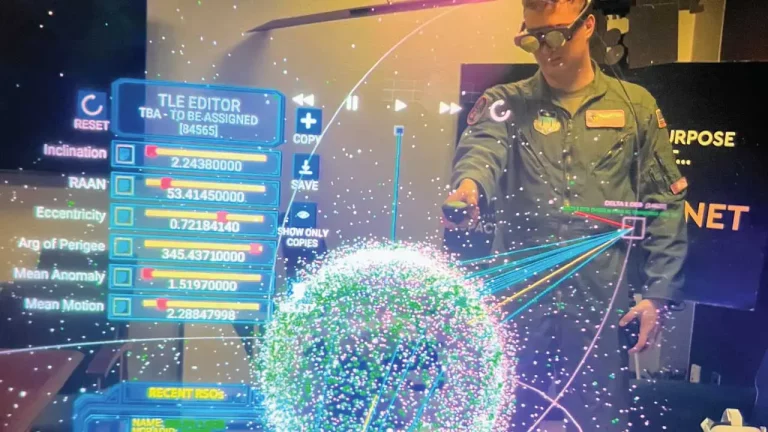

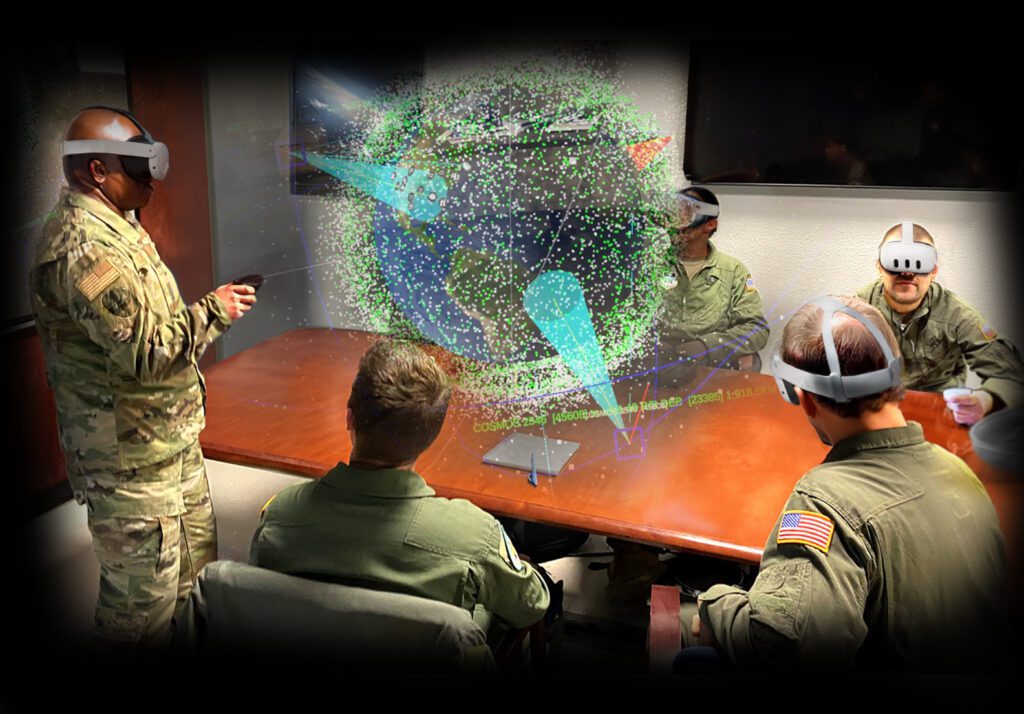

KWYN® SOLAR is an AR/VR space training platform revolutionizing space domain awareness (SDA) through easy-to-understand 3D visualizations, real-time analysis, and detailed information overlays of the space domain.

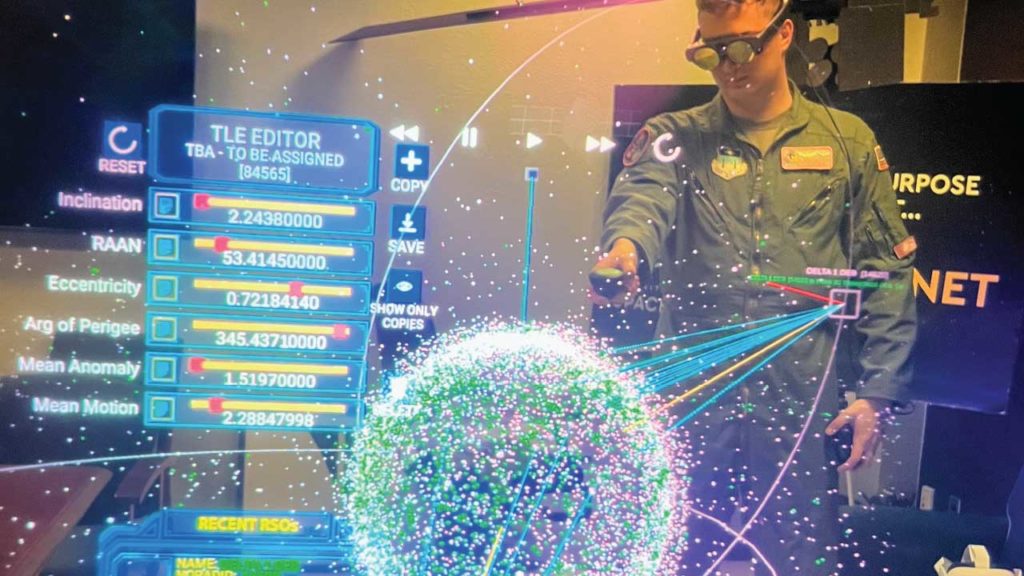

Step into KWYN SOLAR’s advanced models of satellites and other space assets, manipulate objects and their orbits, and observe the effects in real time. Both new learners and experienced space professionals can gain insight into complex and counterintuitive space concepts, leading to better-informed decisions for managing and defending our space operations.

Visualize satellite data

As space becomes more congested, accurate and timely SDA is critical for government and commercial satellite operators. New space operators must fully grasp the fundamentals of the space domain, and AR/VR training methods have proven effective in helping new operators quickly understand complex scenarios.

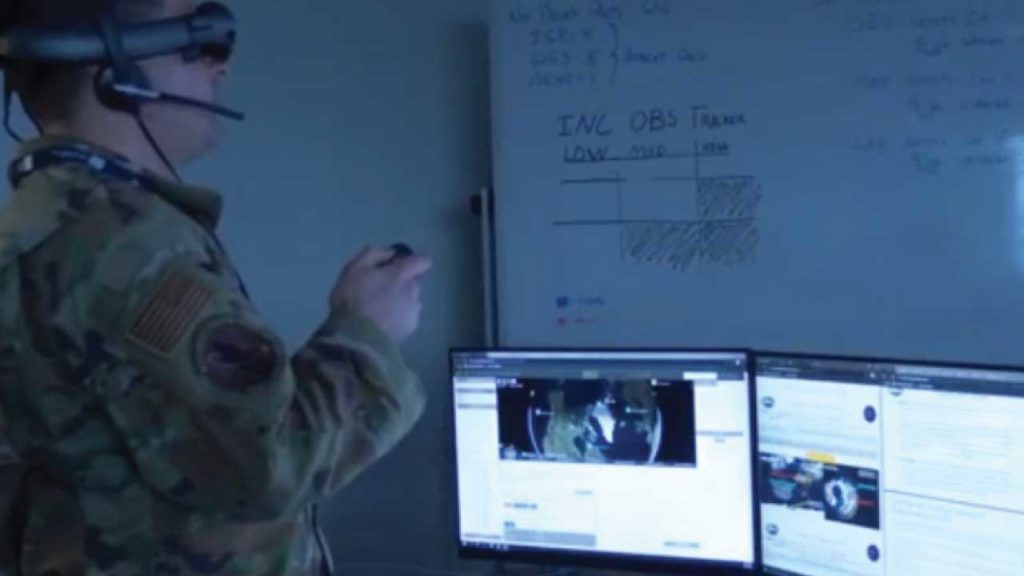

KWYN SOLAR is a mixed reality space training tool that allows users to dive into interactive 4D simulations of space and visualize satellite data, helping them understand relationships between objects in orbit. The platform’s interactive displays make space domain concepts easy to learn and easy to retain.

This space domain AI tool can be tailored to individual users or specific scenarios, and it can synchronize across multiple devices for classroom or network-based collaboration.

Augmented reality space training

Government and university customers are using KWYN SOLAR to accelerate learning of orbital dynamics concepts, better preparing trainees to execute US military operations and command and control (C2) space battle management.

Real-world deployments. KWYN SOLAR is used both for cadet training at the US Air Force Academy and by operational units such as Space Delta 13’s Operations Squadron.

Tailored, effective, and human-centered design

Immersive AR/VR training platform.

KWYN SOLAR leverages advanced AR and VR technologies to provide an immersive educational experience in the space domain, enabling users to interact with and visualize complex relationships between space objects in four dimensions.

Advanced visualizations.

Offers 3D visualizations, filtering, and annotation tools to enhance understanding of satellite field of view, satellite communications operations, and proximity-based conjunction assessments.

Customized and compatible.

KWYN SOLAR offers personalized experiences tailored to individual needs, informed by human factors expertise. It is compatible with multiple AR/VR headsets, including Magic Leap, Meta Quest, and HTC VIVE.

KWYN SOLAR key benefits

Improved SDA and decision-making

Turns satellite ephemerides into actionable intelligence through real-time 3D visualizations and data analysis, supporting rapid SDA and decision-making.

Contextual metadata overlays

Provides synchronized data overlays that give context based on user access level, supporting both secure and intuitive understanding of satellite constellations.

Space science empowerment

Facilitates the study of complex mathematics, astrodynamics, and new space architectures, empowering students and researchers to explore new frontiers and publish impactful findings.

Proven enhanced efficiency

Cognitive evaluation teams have validated that the platform reduces cognitive load, enhancing learning and retention. Institutions such as the US Air Force Academy are using KWYN SOLAR to train cadets for Space Force careers.

Core educational content and tools

Supports physics-based models and intuitive interactions, enabling advanced 3D exploration of satellites and space operations.

Secure licensing

Offers secure data handling, accredited hardware, and operational scenarios integrated with tactics, techniques, and procedures (TTPs).

See how KWYN SOLAR works

KWYN SOLAR immerses you in the current space picture, using leading-edge AR/VR technology to help you gain a deeper understanding of real-time 3D and 4D space concepts.

Team insights: What drives our work

Director of Space and Airborne Systems

UX Innovation Division

Director of Program Transition

i5 STRAT features KWYN SOLAR

i5 STRAT (Space Training, Research, Advancements, and Technology Talks) aims to provide i5 members across the nation with access to innovators in the space domain. These innovators include CEOs, astronauts, Space Force members, and other space-minded individuals who share their unique insights and perspectives on space-related topics.

In this episode, John Stevenson, a Martinson Scholar at the United States Air Force Academy, interviews Rob Hyland and Dr. Susan Latiff from Charles River Analytics about their mixed reality space domain awareness tool.

From the press

Space Explored, Space Force modernizing training with mixed reality for the competitive space domain

Blue Ribbon News, Rockwall area space scientist shares powerful virtual reality teaching tool with local cadets

Meet the team!

Daniel Stouch

Space and Airborne Systems

Rob Hyland

Director of Program Transition

Dr. Susan Latiff

User Experience Scientist

UX Innovation Division

Patrick Hosman

Game Design Engineer

Human-Centered AI

Dan Duggan

XR Software Engineer

UX Innovation

Start using KWYN SOLAR today!

Secure data handling, accredited hardware, TTP-integrated operational scenarios

The appearance of U.S. Department of Defense visual information does not imply or constitute DOD endorsement. Depictions of people in military uniforms were partially generated with Adobe AI for privacy protections.