Scruff™

A framework for building artificial intelligence systems

Making AI easier

Most modeling languages assume that a single kind of component, such as constraint systems or probabilistic methods, can describe an entire problem domain.

AI reflects the real world, which is not always neatly described. Because no one-size-fits-all solution covers everything an AI system needs to do, we need to apply appropriate techniques to different aspects of the system. Depending on the application, model components such as those for perception, inference, learning, and planning can be mixed and matched.

These piecemeal components need to be organized coherently within an overarching modeling framework. Scruff™ from Charles River Analytics provides that compositional framework.

“Scruff allows you to integrate many different kinds of model components—physics, probabilistic, and neural networks—within a single model.”

Dr. Sanja Cvijic

Senior Scientist and Division Director, Human-Centered AI

AI development challenges

Without Scruff, AI development runs into problems.

Approaches tend to be either:

- Neat, but unable to accommodate many different kinds of models

- Scruffy, accommodating all kinds of models but in ways that are not easily explainable

The benefits of Scruff

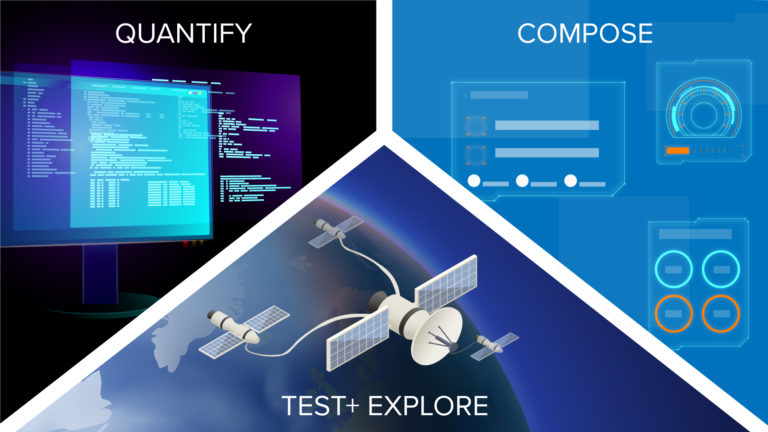

Open-source Scruff allows you to:

- Build explainable AI models that you can experiment with

- Create a coherent framework so you can easily find and interchange components

- Democratize the creation of AI

- Enhance capabilities in developing AI systems, particularly systems that make decisions in the face of uncertainty

The role of probabilistic programming

A probabilistic model consists of a set of variables, each with its own definition that describes how likely it is to take each of its values. Because each variable is defined in terms of other variables, together the variables form a network.
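As a minimal sketch of such a network, the plain Python below (not Scruff's API) defines three hypothetical Boolean variables, `rain`, `sprinkler`, and `wet_grass`, each with a probability of being true that depends on its parent variables. The numbers are illustrative assumptions. The chain rule multiplies each variable's conditional probability to score a full assignment, and summing those scores over assignments gives a marginal probability.

```python
from itertools import product

# Hypothetical toy network: each variable lists its parents and a function
# giving P(variable is True | parent values). Numbers are illustrative only.
NETWORK = {
    "rain": ((), lambda: 0.2),
    "sprinkler": (("rain",), lambda rain: 0.01 if rain else 0.4),
    "wet_grass": (
        ("rain", "sprinkler"),
        lambda rain, sprinkler: 0.99 if (rain and sprinkler)
        else 0.9 if rain else 0.8 if sprinkler else 0.0,
    ),
}


def joint_probability(assignment):
    """Chain rule: multiply each variable's probability given its parents."""
    p = 1.0
    for name, (parents, p_true_given) in NETWORK.items():
        p_true = p_true_given(*(assignment[q] for q in parents))
        p *= p_true if assignment[name] else 1.0 - p_true
    return p


def marginal(name):
    """P(name is True), by summing the joint over every full assignment."""
    names = list(NETWORK)
    total = 0.0
    for values in product([False, True], repeat=len(names)):
        assignment = dict(zip(names, values))
        if assignment[name]:
            total += joint_probability(assignment)
    return total
```

With these numbers, `marginal("wet_grass")` comes out to roughly 0.436. Exhaustive enumeration is only practical for tiny networks; probabilistic programming systems use more scalable inference algorithms.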

Probabilistic programming makes complex probabilistic models simpler to express and control: developers write a model as a program, and general-purpose inference algorithms reason about it. Probabilistic programming is one of the key foundations of Scruff; it can describe many physical phenomena and lets developers create probabilistic models quickly. Because different representations can be written in the same language, probabilistic programming unifies them in a single framework and makes them easier to understand.

Scruff applies the same principle to other kinds of AI components, so users don’t need a special-purpose framework for each combination of components. Instead, the general framework handles the combination, and users slot in components as they need them.

Key features of Scruff

Predictive processing is a way of reasoning about dynamic systems: predict what to expect, then reconcile the errors between those predictions and what is actually observed.

Scruff allows users to represent hierarchical dynamic models in which each layer predicts the layer below it and passes prediction errors up to the layer above. These models can have many layers of abstraction, with a chain of predictions flowing down the hierarchy and a chain of error processing flowing up.
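The prediction-and-error loop can be sketched with a toy linear hierarchy. This is a textbook-style predictive-coding update (gradient descent on total squared prediction error), not Scruff's implementation; the function name `settle`, the unit weights and variances, and the fixed prior predicting the top layer are all assumptions made for illustration.

```python
def settle(observation, prior, n_layers=2, lr=0.1, steps=500):
    """Hypothetical linear predictive-coding hierarchy with unit weights.

    mu[0] is the lowest latent layer and mu[-1] the top layer. Each layer
    predicts the one below it; the top layer is predicted by a fixed prior.
    """
    mu = [0.0] * n_layers
    for _ in range(steps):
        # Top-down pass: each layer's prediction comes from the layer above.
        predicted = [mu[i + 1] for i in range(n_layers - 1)] + [prior]
        # Prediction error at each layer relative to the prediction from above.
        errors = [mu[i] - predicted[i] for i in range(n_layers)]
        # Sensory error: the observation versus layer 0's prediction of it.
        bottom_error = observation - mu[0]
        # Bottom-up pass: each layer receives the error of the layer it predicts.
        from_below = [bottom_error] + errors[:-1]
        # Gradient step: explain the error below while staying near the
        # prediction from above.
        mu = [mu[i] + lr * (from_below[i] - errors[i]) for i in range(n_layers)]
    return mu
```

With an observation of 3.0 and a top-level prior of 0.0, `settle(3.0, 0.0)` converges to about `[2.0, 1.0]`: each layer ends up between what the layer below reports and what the layer above predicts.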

Scruff also allows variables to be instantiated flexibly at different time points. Users do not have to model everything in lockstep at the same discrete time step; instead, they can instantiate variables whenever they need to. In predictive processing, higher-level variables in the hierarchy tend to evolve more slowly than lower-level ones.

Implicit representation means that every model component is interpreted as a generative probabilistic model, even if it is not explicitly one. Under this approach, we stipulate that each component supports a set of standard operations, and that these operations relate to each other just as they would for an actual generative probabilistic model.

These principles apply to all kinds of models. For example, a physics-based model that uses differential equations can be interpreted probabilistically by considering how uncertainty in the state propagates through the equations. Given the final state, we can then infer the initial state by reasoning backward through those equations. A data-driven model such as a neural network is another example: we need to be able to take an output and obtain a probability distribution over the inputs. Constraint models with hard and soft constraints can also conform to this methodology.

Such an approach brings all models under one umbrella and breaks systems down into components that are easier to manipulate and that behave predictably.
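To make the physics example concrete, here is a minimal sketch assuming exponential decay, dx/dt = -kx, integrated with Euler steps, with Gaussian noise added to the final state. The class `EulerDecay`, its `sample` and `log_likelihood` operations, and the helper `posterior_over_inputs` are hypothetical names, not Scruff's actual operation set. The point is that the back-inference helper calls only `log_likelihood`, so any component supporting that operation could be plugged in, whether or not it was written as a probabilistic model.

```python
import math


class EulerDecay:
    """Hypothetical physics component: dx/dt = -k * x integrated with Euler
    steps, treated as a stochastic function by adding Gaussian noise to the
    final state."""

    def __init__(self, k=0.5, dt=0.1, steps=20, noise_sd=0.1):
        self.k, self.dt, self.steps, self.noise_sd = k, dt, steps, noise_sd

    def simulate(self, x0):
        """Deterministic forward integration of the differential equation."""
        x = x0
        for _ in range(self.steps):
            x += -self.k * x * self.dt
        return x

    def sample(self, x0, rng):
        """Forward operation: draw a noisy final state given an initial state."""
        return rng.gauss(self.simulate(x0), self.noise_sd)

    def log_likelihood(self, x0, x_final):
        """Scoring operation: log density of a final state given x0."""
        z = (x_final - self.simulate(x0)) / self.noise_sd
        return -0.5 * z * z - math.log(self.noise_sd * math.sqrt(2 * math.pi))


def posterior_over_inputs(component, observed, grid):
    """Generic back inference over a grid of candidate inputs, assuming a
    uniform prior. Uses only the component's log_likelihood operation."""
    logs = [component.log_likelihood(x, observed) for x in grid]
    peak = max(logs)  # subtract the max for numerical stability
    weights = [math.exp(lp - peak) for lp in logs]
    total = sum(weights)
    return [w / total for w in weights]
```

Simulating forward from an initial state of 4.0 and then calling `posterior_over_inputs(EulerDecay(), observed, [i / 100 for i in range(1001)])` on that final state yields a posterior concentrated around 4.0. A grid is the simplest inference choice here; it does not scale to high-dimensional inputs.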

Every model component using implicit representation is represented as a stochastic function: it takes inputs and randomly generates an output from a distribution that depends on those inputs. The approach is based on principles from probabilistic programming, where you describe basic functions and use them to build more complex models.
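A minimal Python sketch of the idea, assuming a stochastic function is simply a callable that takes an input and a random number generator and returns a sampled output. The combinators `det`, `normal`, `flip`, and `chain` are hypothetical names chosen for illustration and are not Scruff's API; they show how basic stochastic functions compose into a larger one.

```python
import random
from typing import Any, Callable

# A stochastic function maps an input (plus a source of randomness) to an output.
StochasticFn = Callable[[Any, random.Random], Any]


def det(f: Callable[[Any], Any]) -> StochasticFn:
    """Lift an ordinary function into a stochastic function that ignores randomness."""
    return lambda x, rng: f(x)


def normal(sd: float) -> StochasticFn:
    """Gaussian noise with standard deviation sd around the input value."""
    return lambda mean, rng: rng.gauss(mean, sd)


def flip() -> StochasticFn:
    """Bernoulli trial whose success probability is the input."""
    return lambda p, rng: rng.random() < p


def chain(*fns: StochasticFn) -> StochasticFn:
    """Compose stochastic functions: each one's sampled output feeds the next."""
    def composed(x, rng):
        for fn in fns:
            x = fn(x, rng)
        return x
    return composed
```

For example, `chain(det(lambda t: 20 + 0.5 * t), normal(1.0))` is a hypothetical sensor model, a deterministic trend plus Gaussian noise, whose samples at an input of 10.0 average about 25. Passing the generator explicitly keeps every component reproducible under a fixed seed.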

Stochastic functions only describe model components. Variables associate these components with things in the real world, especially things that vary over time and space. An instantiation is a particular instance of a variable at a particular point in time and space, and a set of these instantiations can fully describe a situation.

The advantage of this flexibility, especially compared with fixed-time-interval models, is that you can instantiate variables only when you access evidence about them. Because different variables evolve at different rates, instantiation can be as granular as each variable needs: you can instantiate frequently changing variables often and slowly changing variables more rarely.
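The sketch below illustrates variables and instantiations under stated assumptions: each hypothetical `Variable` is a Gaussian random walk with its own volatility, and each `Instantiation` records the variable's value at one time. There is no shared clock; a variable is instantiated only when code asks for it, conditioned on its most recent earlier instantiation. This is an illustration of the concept, not Scruff's data model.

```python
import math
import random
from dataclasses import dataclass, field


@dataclass
class Instantiation:
    """The value of one variable at one particular time."""
    time: float
    value: float


@dataclass
class Variable:
    """Hypothetical variable modeled as a Gaussian random walk."""
    name: str
    volatility: float  # standard deviation of change per unit time
    initial: float = 0.0
    history: list = field(default_factory=list)

    def instantiate(self, time: float, rng: random.Random) -> Instantiation:
        """Create an instantiation at `time`, conditioned on the latest earlier one.
        The gap since the previous instantiation can be any length."""
        if self.history:
            prev = self.history[-1]
            if time < prev.time:
                raise ValueError("instantiations must be created in time order")
            # Noise scales with sqrt(elapsed time), as for Brownian motion.
            sd = self.volatility * math.sqrt(time - prev.time)
            value = rng.gauss(prev.value, sd)
        else:
            value = self.initial
        inst = Instantiation(time, value)
        self.history.append(inst)
        return inst
```

A fast-changing variable might be instantiated every time unit while a slow one is instantiated every 24 units. Scaling the noise by the square root of the elapsed time means the slow variable's values at its sparse instantiation times have the same distribution they would have under step-by-step instantiation, so coarser instantiation loses no consistency.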

Scruff in action

Scruff is a powerful building block for Charles River’s leading-edge work in crafting complex AI systems. Many of our signature AI initiatives involve integrated systems made up of multiple components. To be truly versatile, they require the overarching framework that Scruff provides.

OPEN

An AI-driven system forecasting critical mineral supply, demand, and global trends

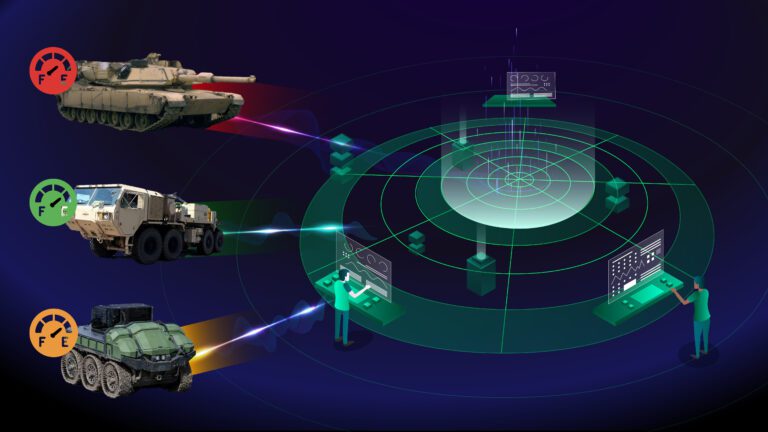

KAML

Real-time combat logistics platform estimating ammunition consumption and resupply needs

READI

A risk-assessment tool for installation security decision-making

HIEROPHANT

A tool integrating hierarchical protocols with command-and-control of uncrewed systems

PRESCRIBE

A tool to assess mental health through preconscious responses

POWERED

An application that uses probabilistic modeling to determine the reliability of power supplies

STAPLES

Using probabilistic programming to enhance supply chain and logistics operations

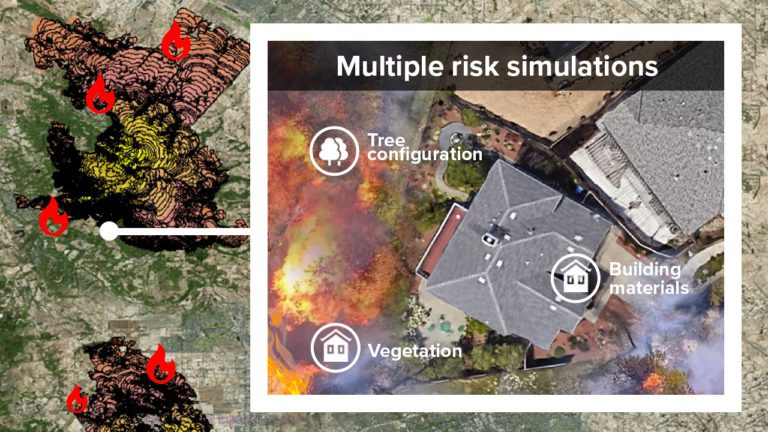

WIMPLE

A wildfire risk assessment and mitigation solution for homeowners and communities

PERCEPTS

A tool to enable more robust AI model development

PSI-Coach

Combining probabilistic programming with cognitive models to predict human behaviors

Advancing the field of probabilistic programming

Charles River Analytics is a pioneer in the field of probabilistic programming. Former Chief Scientist Dr. Avi Pfeffer developed the first general-purpose probabilistic programming language in 2001. Since then, he has developed another probabilistic programming language, Figaro™, published numerous papers, given invited talks, and authored a textbook, Practical Probabilistic Programming.

We introduce Scruff, a new framework for developing AI systems using probabilistic programming. Scruff enables a variety of representations to be included, such as code with stochastic choices, neural networks, differential equations, and constraint systems. These representations are defined implicitly using a set of standardized operations that can be performed on them.

The theory of predictive processing encompasses several elements that make it attractive as the underlying computational approach for a cognitive architecture. We introduce a new cognitive architecture, Scruff, capable of implementing predictive processing models by incorporating key properties of neural networks into the Bayesian probabilistic programming framework.

In this invited keynote, Dr. Avi Pfeffer describes the unique capabilities of probabilistic programming languages (PPLs) and the development of two PPLs at Charles River: Figaro and Scruff.