Autonomous underwater vehicles (AUVs) are often deployed to clear an area, making sure it is safe to transit and explore. Until recently, however, AUVs weren’t so autonomous. They were essentially “playing fetch”: collecting sonar data and returning it to the surface to be manually reviewed, georegistered, and analyzed by scientists. The crew would then need to send the AUV back down to look more closely at objects of interest identified in the raw sonar data.

The Solution

Manually processing the sonar data is time-consuming and error-prone, and Arjuna Balasuriya, Senior Scientist at Charles River Analytics, thought he could solve this problem. “Some of our U.S. Navy customers were spending days to run a single mission,” he said. “All that time increases the risk of detection for covert missions or can put a crew into danger longer in rough seas. We realized that if we could detect objects accurately in noisy sonar data, and pass this information along to an AUV’s autonomy software (its ‘brain’), it could make mission-shortening decisions, like rerouting for a closer look at an interesting (or suspicious) object.”

For U.S. Navy teams using the technology behind Charles River Analytics’ latest solution, AutoTRap Onboard™, a single AUV deployment could execute multiple steps of the search-detect-classify-reacquire chain, turning three days of missions into one night.
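
To make the idea concrete, here is a minimal Python sketch of how onboard detections might feed a reroute decision within a single deployment. It is purely illustrative and is not Charles River Analytics’ software: the `Detection` record, the `plan_reacquire` function, the 0.5 confidence threshold, and the policy of revisiting contacts in order of confidence are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (x, y) position in metres in a local frame; a hypothetical convention.
Waypoint = Tuple[float, float]


@dataclass
class Detection:
    """One object reported by a (hypothetical) onboard detector."""
    position: Waypoint
    confidence: float  # detector score in [0, 1]


def plan_reacquire(survey: List[Waypoint],
                   detections: List[Detection],
                   threshold: float = 0.5) -> List[Waypoint]:
    """Return the survey route followed by closer-look waypoints.

    Assumed policy: finish the planned survey, then revisit every
    detection at or above the threshold, most confident first.
    """
    contacts = sorted(
        (d for d in detections if d.confidence >= threshold),
        key=lambda d: d.confidence,
        reverse=True,
    )
    return list(survey) + [d.position for d in contacts]


# Illustrative inputs only; these are not real mission data.
survey = [(0.0, 0.0), (100.0, 0.0)]
detections = [
    Detection((40.0, 5.0), 0.9),
    Detection((70.0, -3.0), 0.2),   # below threshold, not revisited
    Detection((10.0, 2.0), 0.6),
]
print(plan_reacquire(survey, detections))
```

In this toy version the vehicle itself extends its route to revisit the two confident contacts, rather than surfacing and waiting for an operator to review the data and plan a second mission.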

Deep Learning for Object Detection

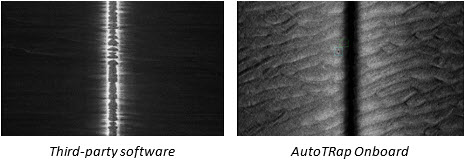

AutoTRap Onboard applies recent advances in deep learning object detection to find a wide range of objects in confusing, difficult-to-interpret sonar data. The latest version of AutoTRap Onboard also includes algorithms developed by Charles River Analytics that generate much clearer sonar pictures directly from the raw data than the leading third-party software does.

AutoTRap Onboard’s image generator delivers much higher fidelity seafloor images from raw sonar data.

Charles River Analytics has partnered with Teledyne Gavia to develop and test AutoTRap Onboard. Version 1.0, released last year, was tested in Boston Harbor and off the coast of Iceland. Results included a 90% detection rate on truncated conical objects on a rocky volcanic seafloor, but with a false positive rate that needed addressing.
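
For readers unfamiliar with these metrics, the short Python sketch below shows one common way to compute them. The article does not say how the false positive rate was measured, so the second function (the share of reported detections that turn out to be false alarms) is only one plausible definition, and the counts are made up for illustration. They were chosen so the detection rate comes out at 90% and are not trial data.

```python
def detection_rate(true_positives: int, false_negatives: int) -> float:
    """Fraction of real objects that the detector found."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no ground-truth objects to score against")
    return true_positives / total


def false_alarm_share(true_positives: int, false_positives: int) -> float:
    """Fraction of reported detections that were not real objects.

    This is an assumed definition; sonar trials may instead report
    false alarms per unit of seafloor area.
    """
    reported = true_positives + false_positives
    if reported == 0:
        raise ValueError("detector reported nothing")
    return false_positives / reported


# Hypothetical counts: 20 objects placed, 18 found, 12 false alarms.
print(f"detection rate:    {detection_rate(18, 2):.0%}")
print(f"false alarm share: {false_alarm_share(18, 12):.0%}")
```

The example shows why both numbers matter: a detector can find most real objects and still send the vehicle to investigate many contacts that are not there.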

In addition to having much clearer sonar pictures as input, Version 1.1 has an expanded training dataset from further test missions in the North Sea, Mediterranean, and Boston Harbor. The dataset on which AutoTRap Onboard’s neural networks are trained needed more diverse geography to improve performance in varied operating environments.

“Between the additions to the training dataset and direct access to the raw data, we expect a significant reduction in false positive rates,” said Balasuriya, looking forward to results from testing due in mid-May.

AutoTRap Onboard’s architecture is also designed to accommodate different imaging sensors—side scan, synthetic aperture, and new types of forward-looking sonar—and can run on any vehicle platform.

AutoTRap Onboard is the latest technology to transition out of the lab at Charles River Analytics, a company regularly recognized for its success in solving near-intractable problems for its government clients.

