Following a successful proof of concept, Charles River Analytics was awarded more than $1.1 million by the US Navy to apply computer vision and autonomous robotics capabilities to navigation over the open ocean.

Images of the ocean can be captured by sensors on uncrewed aircraft systems (UASs), which also record data about position, orientation, and field of view. However, matching those images and data to exact locations and creating accurate maps is difficult: unlike ground terrain, the ocean surface lacks reliable landmarks, and GPS data is not always available.

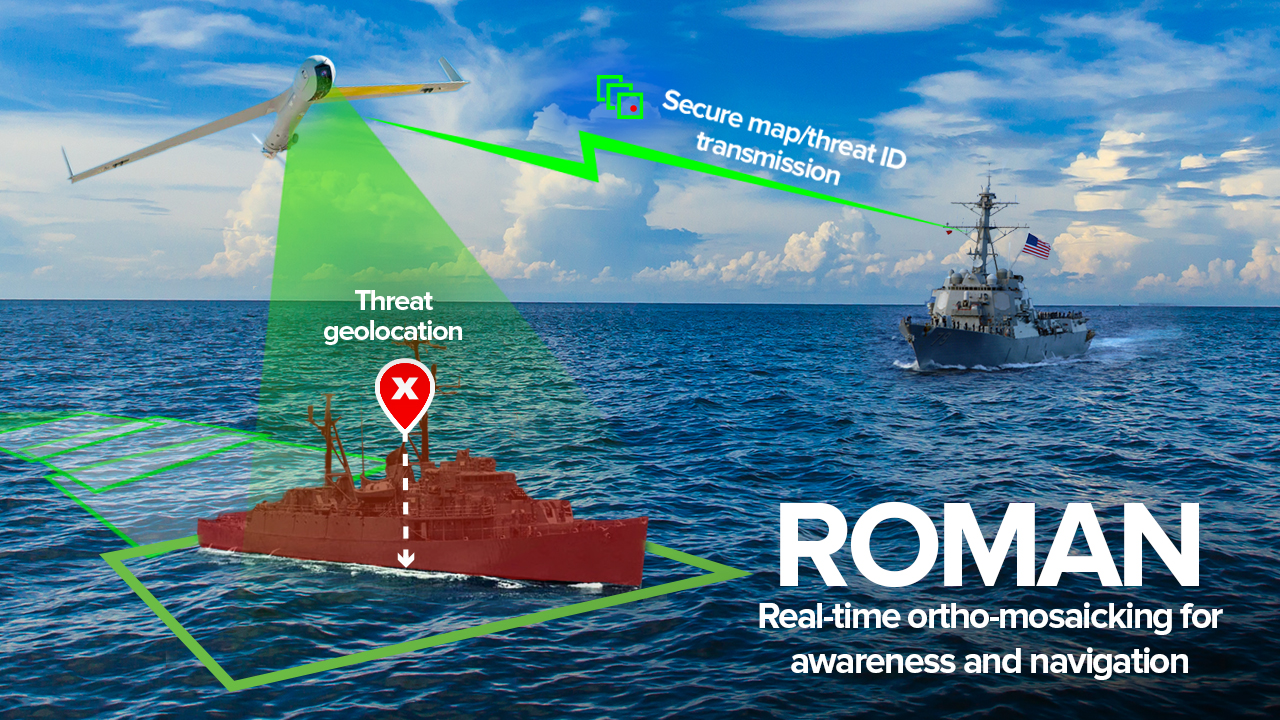

To address these challenges, Charles River Analytics is creating ROMAN (Real-Time Ortho-Mosaicking for Awareness and Navigation). ROMAN is a set of software components for creating real-time sea surface maps and detecting maritime objects.

“The open ocean makes up seventy percent of the planet,” said Dr. Todd Jennings, Scientist at Charles River and Principal Investigator on the ROMAN project. “Keeping up-to-date maps over the open ocean and for aircraft to navigate without the aid of vulnerable external guidance information like GPS presents unique challenges. This project will help provide up-to-the-minute maps and navigational information in contested environments.”

ROMAN ingests video from the electro-optical and infrared sensors onboard a UAS and stitches the frames together to create and continuously update an ortho-mosaic: a detailed, accurate, photorealistic map formed by combining multiple images and geometrically correcting them. Even when GPS data is unavailable, ROMAN’s algorithms will match the UAS sensor data to a base map to provide accurate positioning information. ROMAN will also detect, identify, and track relevant maritime objects, such as submarines and floating mines. A full-featured user interface, integrated with existing Navy communications infrastructure, will support route planning and execution. A particularly innovative aspect of ROMAN is its ability to produce the ortho-mosaic without lengthy post-processing of images or access to cloud computing.
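ROMAN’s own algorithms are not described here. The sketch below only illustrates the basic geometric idea behind "geometrically correcting" a frame: using the sensor’s recorded position, orientation, and field of view to work out where a given pixel lands on the sea surface. It assumes an ideal pinhole camera with no lens distortion, zero roll, a flat sea, and a local east-north-up frame in metres. The function `pixel_to_sea` and all of its parameters are hypothetical, chosen for illustration.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def pixel_to_sea(u: float, v: float, width: int, height: int, hfov_deg: float,
                 east: float, north: float, altitude: float,
                 heading_deg: float, depression_deg: float) -> Optional[Tuple[float, float]]:
    """Project image pixel (u, v) onto a flat sea surface at z = 0.

    Hypothetical illustration only: ideal pinhole camera, square pixels,
    zero roll, flat sea, local east-north-up (ENU) frame in metres.
    Returns the (east, north) point where the pixel's viewing ray meets
    the sea, or None if the ray never reaches it.
    """
    psi = math.radians(heading_deg)       # heading, clockwise from north
    theta = math.radians(depression_deg)  # optical axis angle below horizontal

    # Camera axes expressed in ENU coordinates.
    forward = (math.cos(theta) * math.sin(psi),
               math.cos(theta) * math.cos(psi),
               -math.sin(theta))
    right = (math.cos(psi), -math.sin(psi), 0.0)
    down = cross(forward, right)  # direction in which image rows increase

    # Focal length in pixels, derived from the horizontal field of view.
    f = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)

    # Viewing ray for this pixel, measured from the image centre.
    x = u - width / 2.0
    y = v - height / 2.0
    ray = [x * r + y * d + f * fw for r, d, fw in zip(right, down, forward)]

    if ray[2] >= 0.0:
        return None  # ray points at or above the horizon

    # Intersect the ray starting at (east, north, altitude) with the plane z = 0.
    t = -altitude / ray[2]
    return (east + t * ray[0], north + t * ray[1])


if __name__ == "__main__":
    # Straight-down camera 100 m above the sea with a 90-degree field of view:
    # the right edge of the frame lands roughly 100 m east of the aircraft.
    print(pixel_to_sea(1000, 500, 1000, 1000, 90.0, 0.0, 0.0, 100.0, 0.0, 90.0))
```

Projecting every pixel this way, and resampling the results onto a common grid, is one simple way to build a mosaic from overlapping frames. Without GPS, errors in the assumed position or orientation shift every projected pixel, which is why matching frames against a base map matters for the accuracy the article describes.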

ROMAN will improve situational awareness for maritime operations, including threat detection, anti-submarine warfare, counter-surveillance, and search-and-rescue in GPS-denied areas. Other potential applications include marine life surveys, environmental monitoring, and disaster management. ROMAN technologies will also be incorporated into VisionKit®, Charles River’s library of computer vision components.

Contact us to learn more about ROMAN and our capabilities in robotics and autonomy.

This material is based upon work supported by the Naval Air Warfare Center under Contract No. N68335-21-C-0390. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of NAVAIR. Distribution Statement A – Approved for public release; distribution is unlimited.