The Situation

A key problem for an autonomous mobile robot is determining its position in its environment. Robots are typically equipped with a wide array of sensors, each with its own error characteristics. Because those errors differ from sensor to sensor, fusing the data from all of them lets the robot determine its location more accurately than it could with any single sensor.

The Robot Operating System (ROS) is a popular open-source robotics framework whose many high-quality software packages allow for rapid development of robotic systems. Unfortunately, it lacked a general-purpose solution for fusing data from an arbitrary number of sensors.

The Charles River Analytics Solution

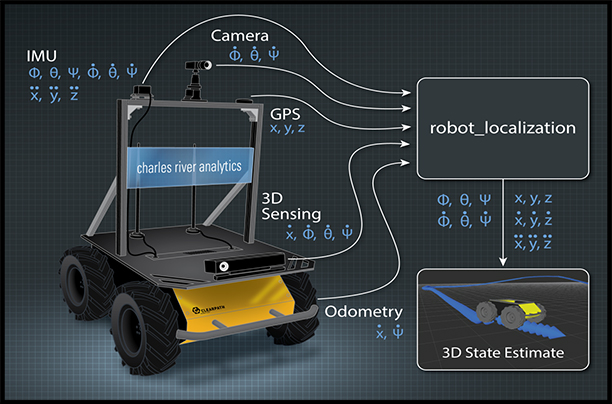

Robotics experts at Charles River Analytics developed the open source robot_localization software package to estimate the 3D position and orientation of autonomous mobile robots. The package fuses data from multiple sources, such as wheel encoders, inertial measurement units (IMUs), cameras, and global positioning system receivers (GPS). This information is used by higher-level autonomy software packages, such as those that carry out route planning, control, mapping, and other specialized functions. We wrote our software from the ground up to be as widely applicable as possible. In addition to allowing for an unlimited number of sensor inputs, robot_localization also includes multiple sensor fusion algorithms, providing increased flexibility for end users with varying requirements.

robot_localization fuses position and orientation information from an unlimited number of sensors to produce an accurate position estimate
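To illustrate why combining sensors with different error characteristics improves accuracy, here is a minimal sketch of the measurement-update step of a one-dimensional Kalman filter that fuses any number of readings of a single position coordinate. It is not robot_localization's implementation: the real package runs extended or unscented Kalman filters over a full 3D state with a motion model, while this sketch assumes a static scalar state and direct position measurements. The names `Estimate`, `kalman_update`, and `fuse`, and the sensor readings, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A scalar state estimate: mean and variance."""
    mean: float
    variance: float


def kalman_update(prior: Estimate, measurement: float, meas_variance: float) -> Estimate:
    """Fuse one direct measurement of the state into the prior estimate."""
    # Kalman gain: how much to trust the measurement relative to the prior.
    gain = prior.variance / (prior.variance + meas_variance)
    mean = prior.mean + gain * (measurement - prior.mean)
    # The fused variance is always smaller than the prior variance.
    variance = (1.0 - gain) * prior.variance
    return Estimate(mean, variance)


def fuse(prior: Estimate, readings: list[tuple[float, float]]) -> Estimate:
    """Sequentially fuse any number of (measurement, variance) readings."""
    estimate = prior
    for measurement, meas_variance in readings:
        estimate = kalman_update(estimate, measurement, meas_variance)
    return estimate


if __name__ == "__main__":
    # Hypothetical readings of the robot's x position (metres) from three sensors,
    # each paired with that sensor's assumed noise variance (m^2).
    readings = [
        (10.4, 0.25),  # wheel odometry
        (9.8, 1.0),    # GPS
        (10.1, 0.5),   # camera
    ]
    # Start from a very uncertain prior so the sensors dominate.
    prior = Estimate(mean=0.0, variance=1e6)
    fused = fuse(prior, readings)
    print(f"x = {fused.mean:.3f} m, variance = {fused.variance:.4f} m^2")
```

With these made-up numbers the fused variance comes out at roughly 0.143 m^2, lower than the best individual sensor's 0.25 m^2, and each reading pulls the mean in proportion to how trustworthy it is. Because every sensor is handled as one more update in the loop, adding another sensor requires no change to the fusion code, which mirrors the design goal of accepting an unlimited number of inputs.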

The Benefit

As robots become more common in our everyday lives, developers will need to spend more of their time on specialized, high-level intelligence and will rely on existing software and technologies to provide the robust, accurate positioning that supports those behaviors. By designing robot_localization to support a multitude of sensors, we ensured that it can be integrated immediately with a wide array of mobile robot platforms across diverse operational domains. Roboticists from around the globe have successfully integrated it into unmanned underwater vehicles (UUVs), unmanned ground vehicles (UGVs), and unmanned aerial vehicles (UAVs).