Our CAMEL approach supports dialogue between humans and artificial intelligence systems.

“In CAMEL, we use causal modeling to identify which factors most influence the output of complex, AI-driven systems. Then, our intuitive UI adds text and visual explanations to the model so users can understand why it produced the output, and whether it is appropriate or not to trust that output.” – Dr. Jeff Druce, Senior Scientist

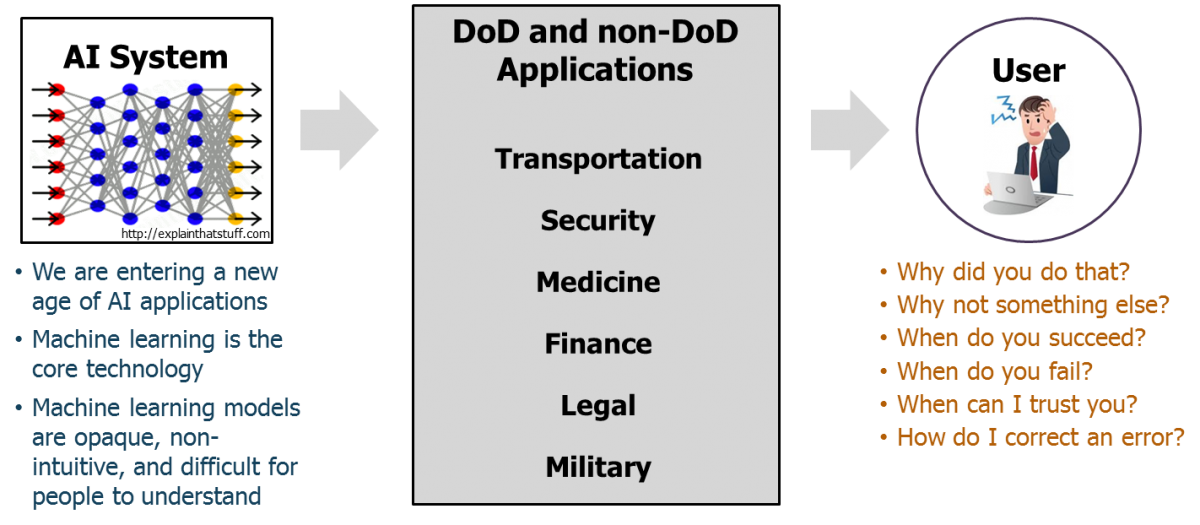

The Department of Defense (DoD) views human-machine teaming as vital to future operations. However, current artificial intelligence (AI) systems cannot explain how they reached their conclusions. As machine learning becomes integral to these human-machine teams, the need for AI to communicate effectively with human team members will only grow.

Dialogue with AI Systems

DARPA’s Explainable Artificial Intelligence (XAI) effort aims to enable dialogue between humans and AI systems. Under XAI, Charles River Analytics led a team that included Brown University, the University of Massachusetts at Amherst, and Roth Cognitive Engineering. The team developed probabilistic causal modeling techniques and an interpretive interface that enable users to interact naturally with machines. Our Causal Models to Explain Learning (CAMEL) approach simplifies explanations of how these complex deep learning machines work.

The Need for Explainable AI (Image courtesy of DARPA)

Strengthening Human-Machine Trust

CAMEL’s explanations are based on causality, a concept that is critical to creating explanations that humans understand. Causal models explain machine learning techniques so users can correctly interpret the results from complex and increasingly mission-critical AI systems.

Learning causal models of such a complex domain is a challenging and currently unaddressed problem. CAMEL unifies causal modeling with the new field of probabilistic programming to create a novel framework that explains machine learning techniques for data analysis and autonomy systems using causal inference.
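
To make the idea of causal explanation concrete, here is a minimal toy sketch of one way to ask which factors most influence a model's output: intervene on each factor in a small causal model and measure how the output rate changes. This is not CAMEL's implementation. The variables (`fog`, `noise`, `target_present`), the probabilities, and the `black_box_detector` function are all hypothetical stand-ins chosen for illustration.

```python
import random

def sample_world(rng, do=None):
    """Draw one sample from a toy structural causal model (hypothetical).

    `do` maps a variable name to a forced value, i.e. an intervention.
    A random number is drawn for every variable even when it is forced,
    so both arms of a comparison consume the same random stream.
    """
    do = do or {}
    fog = do.get("fog", rng.random() < 0.3)
    # Sensor noise is caused by fog in this toy model.
    noise = do.get("noise", rng.random() < (0.8 if fog else 0.1))
    target_present = do.get("target_present", rng.random() < 0.5)
    return {"fog": fog, "noise": noise, "target_present": target_present}

def black_box_detector(world, rng):
    """Stand-in for an opaque learned model; True means 'target reported'."""
    p = 0.9 if world["target_present"] else 0.05
    if world["noise"]:
        p = 0.5 * p + 0.2  # noise pulls the report probability toward the middle
    return rng.random() < p

def detection_rate(do, n=20000, seed=0):
    """Fraction of sampled worlds in which the detector reports a target."""
    rng = random.Random(seed)
    hits = sum(black_box_detector(sample_world(rng, do), rng) for _ in range(n))
    return hits / n

def causal_effect(variable, n=20000, seed=0):
    """Estimated average effect of forcing `variable` to True versus False."""
    return (detection_rate({variable: True}, n, seed)
            - detection_rate({variable: False}, n, seed))

effects = {v: causal_effect(v) for v in ("fog", "noise", "target_present")}
ranked = sorted(effects, key=lambda v: abs(effects[v]), reverse=True)

for name in ranked:
    print(f"{name}: {effects[name]:+.3f}")
```

In this toy setup, `target_present` should come out as by far the most influential factor, while `fog` matters only indirectly through `noise`. A ranked list of this kind is the sort of raw material an explanation interface could turn into text or visuals for a user.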

Broadening the Applicability of CAMEL

CAMEL will significantly impact the way that machine learning systems are deployed, operated, and used inside and outside the DoD. Users of mission-critical systems will be given the rationale behind AI conclusions and can request more detailed explanations. For decision-makers facing life-or-death situations, these explanations are vital to interpreting and applying recommendations effectively, and government institutions increasingly require them.

This research was developed with funding from the Defense Advanced Research Projects Agency (DARPA). The views, opinions and/or findings expressed are those of the author and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. Government.