PAL


Human-robot interface to build trust and interpret speech, gestures, and gaze

Person Aware Liaison (PAL)

Collaborative robots are gaining traction, but before they can become true working partners, they must first close the trust gap with the people they work alongside. Building that trust starts with recognizing that human communication involves more than speech: it also includes body language, hand gestures, and other nonverbal cues.

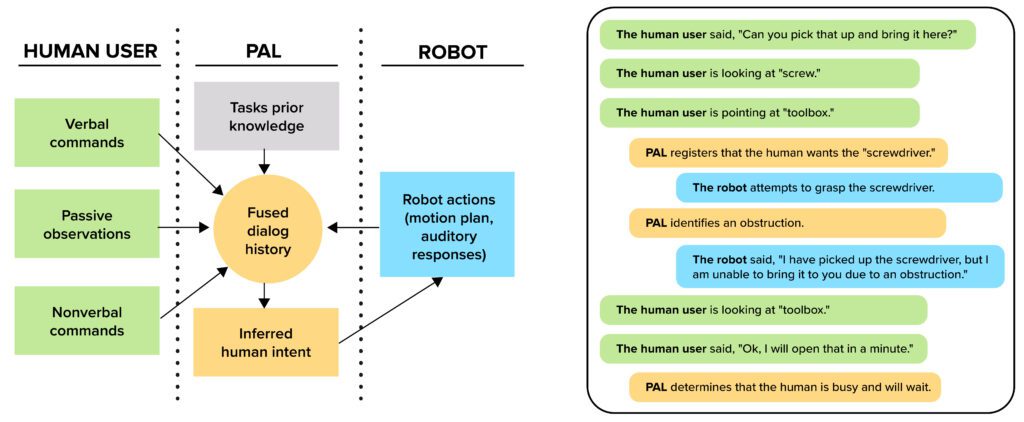

PAL is designed to integrate both verbal and nonverbal human inputs to guide robotic actions and foster trust, making truly collaborative robotic applications possible.

Multimodal understanding for safe and reliable human-robot interaction

PAL makes inferences from modalities such as gesture, gaze, and body posture in addition to analyzing speech. These inferences are then combined into a query to a large language model (LLM), which determines the appropriate response for the robot. The actions PAL ultimately executes account for the human partner and are both safe and non-intrusive, making the robot a smarter and more reliable partner.
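The fusion step can be illustrated with a minimal Python sketch. It assumes upstream perception has already reduced each modality to a short text label. The `MultimodalObservation` fields, the label formats, and the prompt wording are all hypothetical, not PAL's actual interface. The sketch shows how the available cues might be packed into a single LLM query that limits the robot to an allowed set of actions.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class MultimodalObservation:
    """Hypothetical snapshot of the cues perceived from one human partner."""
    speech: str
    gesture: Optional[str] = None      # e.g. "pointing_at:wrench_2" (assumed label format)
    gaze_target: Optional[str] = None  # object or region the person is looking at
    posture: Optional[str] = None      # e.g. "leaning_toward_bench"


def build_llm_query(obs: MultimodalObservation, allowed_actions: list[str]) -> str:
    """Fuse all observed modalities into one prompt for an LLM.

    Modalities that were not observed are left out rather than guessed,
    and the model is asked to choose only from the allowed actions.
    """
    cues = {
        "speech": obs.speech,
        "gesture": obs.gesture,
        "gaze_target": obs.gaze_target,
        "posture": obs.posture,
    }
    observed = {name: value for name, value in cues.items() if value is not None}
    return (
        "You control a collaborative robot. Choose one safe, non-intrusive action.\n"
        f"Allowed actions: {', '.join(allowed_actions)}\n"
        f"Observed human cues (JSON): {json.dumps(observed)}\n"
        'Reply with JSON: {"action": ..., "target": ...}'
    )
```

For example, `build_llm_query(MultimodalObservation("Can you bring me that?", gesture="pointing_at:wrench_2"), ["fetch", "wait"])` produces a prompt that pairs the spoken request with the pointing cue. Neither modality alone is enough to resolve "that".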

Dr. Madison Clark-Turner

Robotics Scientist and Principal Investigator on PAL

Understanding intent through contextual cues for trustworthy collaboration

A robot that can draw on contextual cues from a human and then respond courteously can encourage trust and more efficient collaboration. PAL interprets both verbal and nonverbal inputs from its human partner to infer the user's intent from context.

For example, if a human asks a robot, “Can you bring me that?”, the robot will need to interpret what the human is pointing at. Or if a human asks a robot to place a tool back in its original spot, the robot will need to look back through its history of actions to determine the tool’s original location. PAL also factors in user gaze and posture to infer engagement, deducing when to follow up and interact with the human and when to leave them alone to focus.
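Both examples above can be sketched in Python under simplifying assumptions. The sketch assumes the pointing gesture is available as a 3D ray (origin and direction) from a hand or arm tracker, that scene objects have known positions, and that the robot logs its actions as `(action, object, location)` tuples. The names `SceneObject`, `resolve_pointing_target`, and `original_location`, along with the 15-degree tolerance, are hypothetical illustrations, not PAL's implementation.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class SceneObject:
    name: str
    position: tuple[float, float, float]


def resolve_pointing_target(origin, direction, objects, max_angle_deg=15.0) -> Optional[SceneObject]:
    """Return the object with the smallest angle to the pointing ray,
    or None if no object lies within max_angle_deg of it."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    best, best_angle = None, max_angle_deg
    for obj in objects:
        to_obj = [p - o for p, o in zip(obj.position, origin)]
        dist = math.sqrt(sum(v * v for v in to_obj))
        if dist == 0:
            continue  # object coincides with the ray origin; angle undefined
        cos_a = sum(u * v for u, v in zip(unit, to_obj)) / dist
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best, best_angle = obj, angle
    return best


def original_location(history, tool) -> Optional[str]:
    """Scan the action log oldest-first and return where the tool was
    first picked up from, i.e. its original spot."""
    for action, obj, location in history:
        if action == "pick" and obj == tool:
            return location
    return None
```

Choosing the object with the smallest angle to the ray, rather than the nearest object along it, tolerates the imprecision of real pointing. Returning `None` lets the robot ask a clarifying question instead of guessing.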

PAL’s LLM is based on a publicly available language model. This foundation model performs well at answering questions but produces unstructured output, so the team is enhancing it with custom methods to meet PAL’s specific needs. PAL also leverages Charles River’s expertise in physiological sensing, user experience design, and assistive robotics.
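One common way to turn unstructured LLM output into something a robot can safely execute is to request JSON and validate it against a fixed set of allowed actions. The sketch below assumes that approach, which is not necessarily what the PAL team does. `ALLOWED_ACTIONS`, `RobotCommand`, and `parse_llm_reply` are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical action vocabulary; a real system would define its own.
ALLOWED_ACTIONS = {"fetch", "hand_over", "return_to_origin", "wait"}


@dataclass(frozen=True)
class RobotCommand:
    action: str
    target: Optional[str]


def parse_llm_reply(reply: str) -> Optional[RobotCommand]:
    """Convert free-form LLM text into a structured command.

    Returns None if the reply contains no valid JSON object or names an
    action outside the allowed set, so the robot can fall back to asking
    a clarifying question or doing nothing.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    action, target = data.get("action"), data.get("target")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return None
    if target is not None and not isinstance(target, str):
        return None
    return RobotCommand(action=action, target=target)
```

Rejecting anything outside the whitelist, rather than trying to repair it, keeps the model's free-form text from ever reaching the robot's controllers directly.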

Expanding human-robot collaboration for real-world, unstructured environments

PAL is especially useful for today’s robotics applications, which have moved away from traditional robots confined to physically enclosed or caged work areas. Robots now operate in large, unstructured environments that are difficult to describe and are often better navigated by humans, making intuitive human-robot interaction essential.

Humans, on the other hand, do not always have the strength or endurance to complete heavy lifts or repetitive tasks. “There is absolutely a merging of human-robot worlds that can happen, and we are primed to make this as smooth a transition as possible with PAL,” Clark-Turner adds.

Initial development covered the gaze, gesture, and speech modalities. The next phase will add modalities such as body posture and informative gestures (such as directions for moving objects).

The PAL team is also developing general manipulation skills: picking up, rotating, and handing objects to the user. These skills are essential for assembly, maintenance, repair, and manufacturing across diverse environments on land, underwater, and in space. “We see a broad roadmap of applications down the line, everything from assistive medical care to industrial operations to help with repairs and maintenance,” Clark-Turner says. Another potential application is helping NASA execute missions in space.

Contact us to learn more about PAL and our other capabilities in robotics and autonomy.